Amazon EKS

Overview

This service contains Terraform and Packer code to deploy a production-grade Kubernetes cluster on AWS using Elastic Kubernetes Service (EKS).

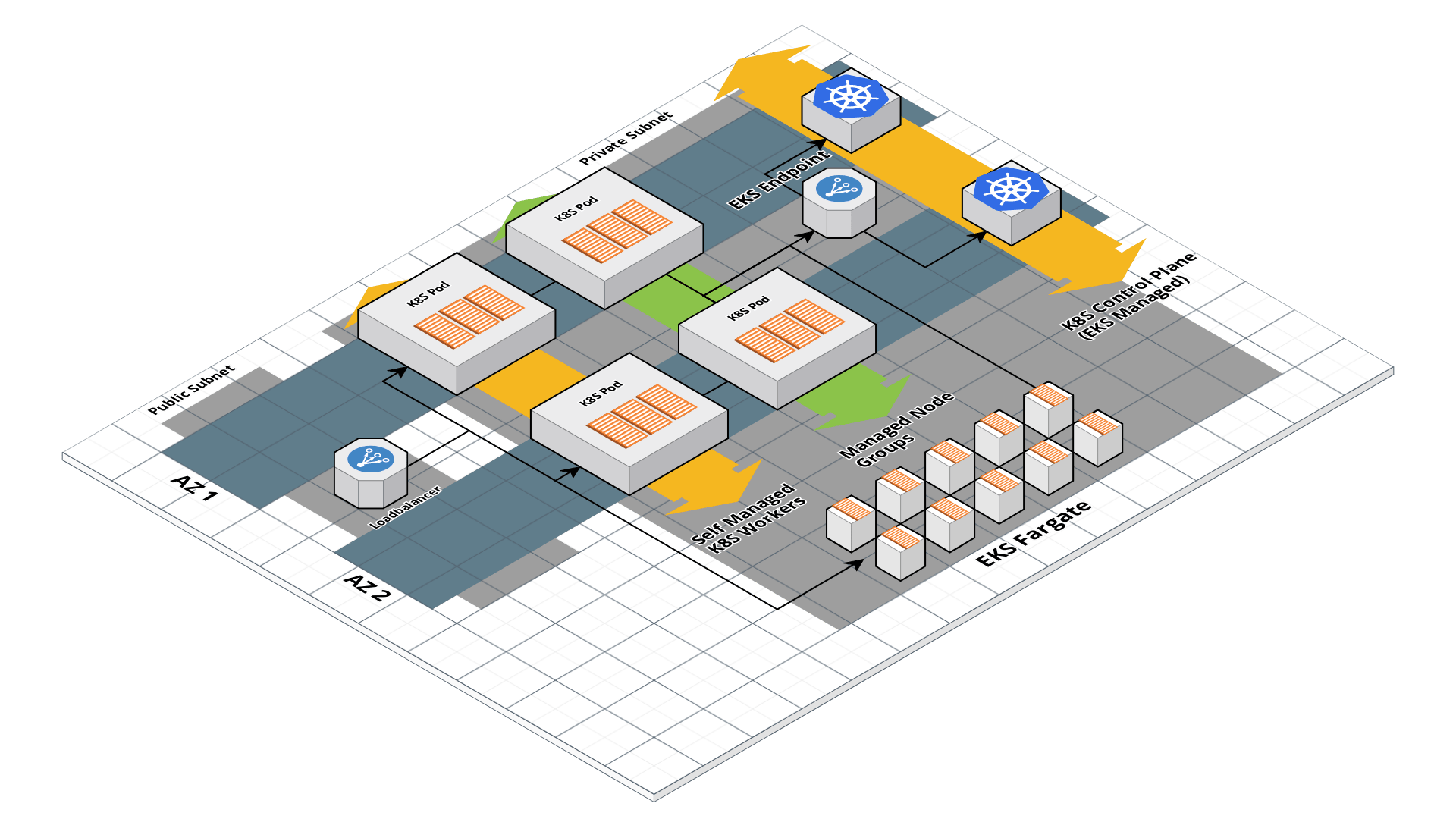

EKS architecture

EKS architecture

Features

- Deploy a fully-managed control plane

- Deploy worker nodes in an Auto Scaling Group

- Deploy Pods using Fargate instead of managing worker nodes

- Zero-downtime, rolling deployment for updating worker nodes

- IAM to RBAC mapping

- Auto scaling and auto healing

- For Self Managed and Managed Node Group Workers:

- Server-hardening with fail2ban, ip-lockdown, auto-update, and more

- Manage SSH access via IAM groups via ssh-grunt

- CloudWatch log aggregation

- CloudWatch metrics and alerts

Learn

This repo is a part of the Gruntwork Service Catalog, a collection of reusable, battle-tested, production ready infrastructure code. If you’ve never used the Service Catalog before, make sure to read How to use the Gruntwork Service Catalog!

Under the hood, this is all implemented using Terraform modules from the Gruntwork terraform-aws-eks repo. If you are a subscriber and don’t have access to this repo, email support@gruntwork.io.

Core concepts

To understand core concepts like what is Kubernetes, the different worker types, how to authenticate to Kubernetes, and more, see the documentation in the terraform-aws-eks repo.

Repo organization

- modules: the main implementation code for this repo, broken down into multiple standalone, orthogonal submodules.

- examples: This folder contains working examples of how to use the submodules.

- test: Automated tests for the modules and examples.

Deploy

Non-production deployment (quick start for learning)

If you just want to try this repo out for experimenting and learning, check out the following resources:

- examples/for-learning-and-testing folder: The

examples/for-learning-and-testingfolder contains standalone sample code optimized for learning, experimenting, and testing (but not direct production usage).

Production deployment

If you want to deploy this repo in production, check out the following resources:

-

examples/for-production folder: The

examples/for-productionfolder contains sample code optimized for direct usage in production. This is code from the Gruntwork Reference Architecture, and it shows you how we build an end-to-end, integrated tech stack on top of the Gruntwork Service Catalog. -

How to deploy a production-grade Kubernetes cluster on AWS: A step-by-step guide for deploying a production-grade EKS cluster on AWS using the code in this repo.

Manage

For information on how to manage your EKS cluster, including how to deploy Pods on Fargate, how to associate IAM roles to Pod, how to upgrade your EKS cluster, and more, see the documentation in the terraform-aws-eks repo.

To add and manage additional worker groups, refer to the eks-workers module.

Sample Usage

- Terraform

- Terragrunt

# ------------------------------------------------------------------------------------------------------

# DEPLOY GRUNTWORK'S EKS-CLUSTER MODULE

# ------------------------------------------------------------------------------------------------------

module "eks_cluster" {

source = "git::git@github.com:gruntwork-io/terraform-aws-service-catalog.git//modules/services/eks-cluster?ref=v0.127.2"

# ----------------------------------------------------------------------------------------------------

# REQUIRED VARIABLES

# ----------------------------------------------------------------------------------------------------

# The list of CIDR blocks to allow inbound access to the Kubernetes API.

allow_inbound_api_access_from_cidr_blocks = <list(string)>

# The name of the EKS cluster

cluster_name = <string>

# List of IDs of the subnets that can be used for the EKS Control Plane.

control_plane_vpc_subnet_ids = <list(string)>

# ID of the VPC where the EKS resources will be deployed.

vpc_id = <string>

# ----------------------------------------------------------------------------------------------------

# OPTIONAL VARIABLES

# ----------------------------------------------------------------------------------------------------

# The authentication mode for the cluster. Valid values are CONFIG_MAP, API or

# API_AND_CONFIG_MAP.

access_config_authentication_mode = "CONFIG_MAP"

# Map of EKS Access Entries to be created for the cluster.

access_entries = {}

# Map of EKS Access Entry Policy Associations to be created for the cluster.

access_entry_policy_associations = {}

# A list of additional security group IDs to attach to the control plane.

additional_security_groups_for_control_plane = []

# A list of additional security group IDs to attach to the worker nodes.

additional_security_groups_for_workers = []

# The ARNs of SNS topics where CloudWatch alarms (e.g., for CPU, memory, and

# disk space usage) should send notifications.

alarms_sns_topic_arn = []

# The list of CIDR blocks to allow inbound SSH access to the worker groups.

allow_inbound_ssh_from_cidr_blocks = []

# The list of security group IDs to allow inbound SSH access to the worker

# groups.

allow_inbound_ssh_from_security_groups = []

# The list of CIDR blocks to allow inbound access to the private Kubernetes

# API endpoint (e.g. the endpoint within the VPC, not the public endpoint).

allow_private_api_access_from_cidr_blocks = []

# The list of security groups to allow inbound access to the private

# Kubernetes API endpoint (e.g. the endpoint within the VPC, not the public

# endpoint).

allow_private_api_access_from_security_groups = []

# Default value for enable_detailed_monitoring field of

# autoscaling_group_configurations.

asg_default_enable_detailed_monitoring = true

# Default value for the http_put_response_hop_limit field of

# autoscaling_group_configurations.

asg_default_http_put_response_hop_limit = null

# Default value for the asg_instance_root_volume_encryption field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_encryption will use this value.

asg_default_instance_root_volume_encryption = true

# Default value for the asg_instance_root_volume_iops field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_iops will use this value.

asg_default_instance_root_volume_iops = null

# Default value for the asg_instance_root_volume_size field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_size will use this value.

asg_default_instance_root_volume_size = 40

# Default value for the asg_instance_root_volume_throughput field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_throughput will use this value.

asg_default_instance_root_volume_throughput = null

# Default value for the asg_instance_root_volume_type field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_type will use this value.

asg_default_instance_root_volume_type = "standard"

# Default value for the asg_instance_type field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_type will use this value.

asg_default_instance_type = "t3.medium"

# Default value for the max_pods_allowed field of

# autoscaling_group_configurations. Any map entry that does not specify

# max_pods_allowed will use this value.

asg_default_max_pods_allowed = null

# Default value for the max_size field of autoscaling_group_configurations.

# Any map entry that does not specify max_size will use this value.

asg_default_max_size = 2

# Default value for the min_size field of autoscaling_group_configurations.

# Any map entry that does not specify min_size will use this value.

asg_default_min_size = 1

# Default value for the multi_instance_overrides field of

# autoscaling_group_configurations. Any map entry that does not specify

# multi_instance_overrides will use this value.

asg_default_multi_instance_overrides = []

# Default value for the on_demand_allocation_strategy field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_allocation_strategy will use this value.

asg_default_on_demand_allocation_strategy = null

# Default value for the on_demand_base_capacity field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_base_capacity will use this value.

asg_default_on_demand_base_capacity = null

# Default value for the on_demand_percentage_above_base_capacity field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_percentage_above_base_capacity will use this value.

asg_default_on_demand_percentage_above_base_capacity = null

# Default value for the spot_allocation_strategy field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_allocation_strategy will use this value.

asg_default_spot_allocation_strategy = null

# Default value for the spot_instance_pools field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_instance_pools will use this value.

asg_default_spot_instance_pools = null

# Default value for the spot_max_price field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_max_price will use this value. Set to empty string (default) to mean

# on-demand price.

asg_default_spot_max_price = null

# Default value for the tags field of autoscaling_group_configurations. Any

# map entry that does not specify tags will use this value.

asg_default_tags = []

# Default value for the use_multi_instances_policy field of

# autoscaling_group_configurations. Any map entry that does not specify

# use_multi_instances_policy will use this value.

asg_default_use_multi_instances_policy = false

# Custom name for the IAM instance profile for the Self-managed workers. When

# null, the IAM role name will be used. If var.asg_use_resource_name_prefix is

# true, this will be used as a name prefix.

asg_iam_instance_profile_name = null

# ARN of a permission boundary to apply on the IAM role created for the self

# managed workers.

asg_iam_permissions_boundary = null

# A map of tags to apply to the Security Group of the ASG for the self managed

# worker pool. The key is the tag name and the value is the tag value.

asg_security_group_tags = {}

# When true, all the relevant resources for self managed workers will be set

# to use the name_prefix attribute so that unique names are generated for

# them. This allows those resources to support recreation through

# create_before_destroy lifecycle rules. Set to false if you were using any

# version before 0.65.0 and wish to avoid recreating the entire worker pool on

# your cluster.

asg_use_resource_name_prefix = true

# A map of custom tags to apply to the EKS Worker IAM Policies. The key is the

# tag name and the value is the tag value.

asg_worker_iam_policy_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Role. The key is the tag

# name and the value is the tag value.

asg_worker_iam_role_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Instance Profile. The

# key is the tag name and the value is the tag value.

asg_worker_instance_profile_tags = {}

# A map of custom tags to apply to the Fargate Profile if enabled. The key is

# the tag name and the value is the tag value.

auth_merger_eks_fargate_profile_tags = {}

# Configure one or more Auto Scaling Groups (ASGs) to manage the EC2 instances

# in this cluster. If any of the values are not provided, the specified

# default variable will be used to lookup a default value.

autoscaling_group_configurations = {}

# Adds additional tags to each ASG that allow a cluster autoscaler to

# auto-discover them.

autoscaling_group_include_autoscaler_discovery_tags = true

# Name of the default aws-auth ConfigMap to use. This will be the name of the

# ConfigMap that gets created by this module in the aws-auth-merger namespace

# to seed the initial aws-auth ConfigMap.

aws_auth_merger_default_configmap_name = "main-aws-auth"

# Location of the container image to use for the aws-auth-merger app. You can

# use the Dockerfile provided in terraform-aws-eks to construct an image. See

# https://github.com/gruntwork-io/terraform-aws-eks/blob/master/modules/eks-aws-auth-merger/core-concepts.md#how-do-i-use-the-aws-auth-merger

# for more info.

aws_auth_merger_image = null

# Namespace to deploy the aws-auth-merger into. The app will watch for

# ConfigMaps in this Namespace to merge into the aws-auth ConfigMap.

aws_auth_merger_namespace = "aws-auth-merger"

# Whether or not to bootstrap an access entry with cluster admin permissions

# for the cluster creator.

bootstrap_cluster_creator_admin_permissions = true

# Cloud init scripts to run on the EKS worker nodes when it is booting. See

# the part blocks in

# https://www.terraform.io/docs/providers/template/d/cloudinit_config.html for

# syntax. To override the default boot script installed as part of the module,

# use the key `default`.

cloud_init_parts = {}

# ARN of permissions boundary to apply to the cluster IAM role - the IAM role

# created for the EKS cluster.

cluster_iam_role_permissions_boundary = null

# The AMI to run on each instance in the EKS cluster. You can build the AMI

# using the Packer template eks-node-al2.json. One of var.cluster_instance_ami

# or var.cluster_instance_ami_filters is required. Only used if

# var.cluster_instance_ami_filters is null. Set to null if

# cluster_instance_ami_filters is set.

cluster_instance_ami = null

# Properties on the AMI that can be used to lookup a prebuilt AMI for use with

# self managed workers. You can build the AMI using the Packer template

# eks-node-al2.json. One of var.cluster_instance_ami or

# var.cluster_instance_ami_filters is required. If both are defined,

# var.cluster_instance_ami_filters will be used. Set to null if

# cluster_instance_ami is set.

cluster_instance_ami_filters = null

# Whether or not to associate a public IP address to the instances of the self

# managed ASGs. Will only work if the instances are launched in a public

# subnet.

cluster_instance_associate_public_ip_address = false

# The name of the Key Pair that can be used to SSH to each instance in the EKS

# cluster

cluster_instance_keypair_name = null

# The IP family used to assign Kubernetes pod and service addresses. Valid

# values are ipv4 (default) and ipv6. You can only specify an IP family when

# you create a cluster, changing this value will force a new cluster to be

# created.

cluster_network_config_ip_family = "ipv4"

# The CIDR block to assign Kubernetes pod and service IP addresses from. If

# you don't specify a block, Kubernetes assigns addresses from either the

# 10.100.0.0/16 or 172.20.0.0/16 CIDR blocks. You can only specify a custom

# CIDR block when you create a cluster, changing this value will force a new

# cluster to be created.

cluster_network_config_service_ipv4_cidr = null

# The ID (ARN, alias ARN, AWS ID) of a customer managed KMS Key to use for

# encrypting log data in the CloudWatch log group for EKS control plane logs.

control_plane_cloudwatch_log_group_kms_key_id = null

# The number of days to retain log events in the CloudWatch log group for EKS

# control plane logs. Refer to

# https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/cloudwatch_log_group#retention_in_days

# for all the valid values. When null, the log events are retained forever.

control_plane_cloudwatch_log_group_retention_in_days = null

# Tags to apply on the CloudWatch Log Group for EKS control plane logs,

# encoded as a map where the keys are tag keys and values are tag values.

control_plane_cloudwatch_log_group_tags = null

# A list of availability zones in the region that we CANNOT use to deploy the

# EKS control plane. You can use this to avoid availability zones that may not

# be able to provision the resources (e.g ran out of capacity). If empty, will

# allow all availability zones.

control_plane_disallowed_availability_zones = ["us-east-1e"]

# When true, IAM role will be created and attached to Fargate control plane

# services.

create_default_fargate_iam_role = true

# The name to use for the default Fargate execution IAM role that is created

# when create_default_fargate_iam_role is true. When null, defaults to

# CLUSTER_NAME-fargate-role.

custom_default_fargate_iam_role_name = null

# A map of custom tags to apply to the EKS add-ons. The key is the tag name

# and the value is the tag value.

custom_tags_eks_addons = {}

# A map of unique identifiers to egress security group rules to attach to the

# worker groups.

custom_worker_egress_security_group_rules = {}

# A map of unique identifiers to ingress security group rules to attach to the

# worker groups.

custom_worker_ingress_security_group_rules = {}

# Parameters for the worker cpu usage widget to output for use in a CloudWatch

# dashboard.

dashboard_cpu_usage_widget_parameters = {"height":6,"period":60,"width":8}

# Parameters for the worker disk usage widget to output for use in a

# CloudWatch dashboard.

dashboard_disk_usage_widget_parameters = {"height":6,"period":60,"width":8}

# Parameters for the worker memory usage widget to output for use in a

# CloudWatch dashboard.

dashboard_memory_usage_widget_parameters = {"height":6,"period":60,"width":8}

# A map of default tags to apply to all supported resources in this module.

# These tags will be merged with any other resource specific tags. The key is

# the tag name and the value is the tag value.

default_tags = {}

# Configuraiton object for the EBS CSI Driver EKS AddOn

ebs_csi_driver_addon_config = {}

# A map of custom tags to apply to the EBS CSI Driver AddOn. The key is the

# tag name and the value is the tag value.

ebs_csi_driver_addon_tags = {}

# A map of custom tags to apply to the IAM Policies created for the EBS CSI

# Driver IAM Role if enabled. The key is the tag name and the value is the tag

# value.

ebs_csi_driver_iam_policy_tags = {}

# A map of custom tags to apply to the EBS CSI Driver IAM Role if enabled. The

# key is the tag name and the value is the tag value.

ebs_csi_driver_iam_role_tags = {}

# If using KMS encryption of EBS volumes, provide the KMS Key ARN to be used

# for a policy attachment.

ebs_csi_driver_kms_key_arn = null

# The namespace for the EBS CSI Driver. This will almost always be the

# kube-system namespace.

ebs_csi_driver_namespace = "kube-system"

# The Service Account name to be used with the EBS CSI Driver

ebs_csi_driver_sa_name = "ebs-csi-controller-sa"

# Map of EKS add-ons, where key is name of the add-on and value is a map of

# add-on properties.

eks_addons = {}

# Configuration block with compute configuration for EKS Auto Mode.

eks_auto_mode_compute_config = {"enabled":true,"node_pools":["general-purpose","system"]}

# Whether or not to create an IAM Role for the EKS Worker Nodes when using EKS

# Auto Mode. If using the built-in NodePools for EKS Auto Mode you must either

# provide an IAM Role ARN for `eks_auto_mode_compute_config.node_role_arn` or

# set this to true to automatically create one.

eks_auto_mode_create_node_iam_role = true

# Configuration block with elastic load balancing configuration for the

# cluster.

eks_auto_mode_elastic_load_balancing_config = {}

# Whether or not to enable EKS Auto Mode.

eks_auto_mode_enabled = false

# Description of the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_description = null

# IAM Role Name to for the EKS Auto Mode Node IAM Role. If this is not set a

# default name will be provided in the form of

# `<var.cluster_name-eks-auto-mode-role>`

eks_auto_mode_iam_role_name = null

# The IAM Role Path for the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_path = null

# Permissions Boundary ARN to be used with the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_permissions_boundary = null

# Whether or not to use `eks_auto_mode_iam_role_name` as a prefix for the EKS

# Auto Mode Node IAM Role Name.

eks_auto_mode_iam_role_use_name_prefix = true

# Configuration block with storage configuration for EKS Auto Mode.

eks_auto_mode_storage_config = {}

# A map of custom tags to apply to the EKS Cluster Cluster Creator Access

# Entry. The key is the tag name and the value is the tag value.

eks_cluster_creator_access_entry_tags = {}

# A map of custom tags to apply to the EKS Cluster IAM Role. The key is the

# tag name and the value is the tag value.

eks_cluster_iam_role_tags = {}

# A map of custom tags to apply to the EKS Cluster OIDC Provider. The key is

# the tag name and the value is the tag value.

eks_cluster_oidc_tags = {}

# A map of custom tags to apply to the Security Group for the EKS Cluster

# Control Plane. The key is the tag name and the value is the tag value.

eks_cluster_security_group_tags = {}

# A map of custom tags to apply to the EKS Cluster Control Plane. The key is

# the tag name and the value is the tag value.

eks_cluster_tags = {}

# A map of custom tags to apply to the Control Plane Services Fargate Profile

# IAM Role for this EKS Cluster if enabled. The key is the tag name and the

# value is the tag value.

eks_fargate_profile_iam_role_tags = {}

# A map of custom tags to apply to the Control Plane Services Fargate Profile

# for this EKS Cluster if enabled. The key is the tag name and the value is

# the tag value.

eks_fargate_profile_tags = {}

# If set to true, installs the aws-auth-merger to manage the aws-auth

# configuration. When true, requires setting the var.aws_auth_merger_image

# variable.

enable_aws_auth_merger = false

# When true, deploy the aws-auth-merger into Fargate. It is recommended to run

# the aws-auth-merger on Fargate to avoid chicken and egg issues between the

# aws-auth-merger and having an authenticated worker pool.

enable_aws_auth_merger_fargate = true

# Set to true to enable several basic CloudWatch alarms around CPU usage,

# memory usage, and disk space usage. If set to true, make sure to specify SNS

# topics to send notifications to using var.alarms_sns_topic_arn.

enable_cloudwatch_alarms = true

# Set to true to add IAM permissions to send custom metrics to CloudWatch.

# This is useful in combination with

# https://github.com/gruntwork-io/terraform-aws-monitoring/tree/master/modules/agents/cloudwatch-agent

# to get memory and disk metrics in CloudWatch for your Bastion host.

enable_cloudwatch_metrics = true

# When set to true, the module configures and install the EBS CSI Driver as an

# EKS managed AddOn

# (https://docs.aws.amazon.com/eks/latest/userguide/managing-ebs-csi.html).

enable_ebs_csi_driver = false

# When set to true, the module configures EKS add-ons

# (https://docs.aws.amazon.com/eks/latest/userguide/eks-add-ons.html)

# specified with `eks_addons`. VPC CNI configurations with

# `use_vpc_cni_customize_script` isn't fully supported with addons, as the

# automated add-on lifecycles could potentially undo the configuration

# changes.

enable_eks_addons = false

# Enable fail2ban to block brute force log in attempts. Defaults to true.

enable_fail2ban = true

# Set to true to send worker system logs to CloudWatch. This is useful in

# combination with

# https://github.com/gruntwork-io/terraform-aws-monitoring/tree/master/modules/logs/cloudwatch-log-aggregation-scripts

# to do log aggregation in CloudWatch. Note that this is only recommended for

# aggregating system level logs from the server instances. Container logs

# should be managed through fluent-bit deployed with eks-core-services.

enable_worker_cloudwatch_log_aggregation = false

# A list of the desired control plane logging to enable. See

# https://docs.aws.amazon.com/eks/latest/userguide/control-plane-logs.html for

# the list of available logs.

enabled_control_plane_log_types = ["api","audit","authenticator"]

# Whether or not to enable public API endpoints which allow access to the

# Kubernetes API from outside of the VPC. Note that private access within the

# VPC is always enabled.

endpoint_public_access = true

# If you are using ssh-grunt and your IAM users / groups are defined in a

# separate AWS account, you can use this variable to specify the ARN of an IAM

# role that ssh-grunt can assume to retrieve IAM group and public SSH key info

# from that account. To omit this variable, set it to an empty string (do NOT

# use null, or Terraform will complain).

external_account_ssh_grunt_role_arn = ""

# List of ARNs of AWS IAM roles corresponding to Fargate Profiles that should

# be mapped as Kubernetes Nodes.

fargate_profile_executor_iam_role_arns_for_k8s_role_mapping = []

# A list of availability zones in the region that we CANNOT use to deploy the

# EKS Fargate workers. You can use this to avoid availability zones that may

# not be able to provision the resources (e.g ran out of capacity). If empty,

# will allow all availability zones.

fargate_worker_disallowed_availability_zones = ["us-east-1d","us-east-1e","ca-central-1d"]

# The period, in seconds, over which to measure the CPU utilization percentage

# for the ASG.

high_worker_cpu_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster CPU utilization

# percentage above this threshold.

high_worker_cpu_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_cpu_utilization_treat_missing_data = "missing"

# The period, in seconds, over which to measure the root disk utilization

# percentage for the ASG.

high_worker_disk_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster root disk utilization

# percentage above this threshold.

high_worker_disk_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_disk_utilization_treat_missing_data = "missing"

# The period, in seconds, over which to measure the Memory utilization

# percentage for the ASG.

high_worker_memory_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster Memory utilization

# percentage above this threshold.

high_worker_memory_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_memory_utilization_treat_missing_data = "missing"

# Mapping of IAM role ARNs to Kubernetes RBAC groups that grant permissions to

# the user.

iam_role_to_rbac_group_mapping = {}

# Mapping of IAM user ARNs to Kubernetes RBAC groups that grant permissions to

# the user.

iam_user_to_rbac_group_mapping = {}

# The URL from which to download Kubergrunt if it's not installed already. Use

# to specify a version of kubergrunt that is compatible with your specified

# kubernetes version. Ex.

# 'https://github.com/gruntwork-io/kubergrunt/releases/download/v0.17.3/kubergrunt_<platform>'

kubergrunt_download_url = "https://github.com/gruntwork-io/kubergrunt/releases/download/v0.17.3/kubergrunt_<platform>"

# Version of Kubernetes to use. Refer to EKS docs for list of available

# versions

# (https://docs.aws.amazon.com/eks/latest/userguide/platform-versions.html).

kubernetes_version = "1.32"

# Configure one or more Node Groups to manage the EC2 instances in this

# cluster. Set to empty object ({}) if you do not wish to configure managed

# node groups.

managed_node_group_configurations = {}

# Default value for capacity_type field of managed_node_group_configurations.

node_group_default_capacity_type = "ON_DEMAND"

# Default value for desired_size field of managed_node_group_configurations.

node_group_default_desired_size = 1

# Default value for enable_detailed_monitoring field of

# managed_node_group_configurations.

node_group_default_enable_detailed_monitoring = true

# Default value for http_put_response_hop_limit field of

# managed_node_group_configurations. Any map entry that does not specify

# http_put_response_hop_limit will use this value.

node_group_default_http_put_response_hop_limit = null

# Default value for the instance_root_volume_encryption field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_encryption = true

# Default value for the instance_root_volume_size field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_size = 40

# Default value for the instance_root_volume_type field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_type = "gp3"

# Default value for instance_types field of managed_node_group_configurations.

node_group_default_instance_types = null

# Default value for labels field of managed_node_group_configurations. Unlike

# common_labels which will always be merged in, these labels are only used if

# the labels field is omitted from the configuration.

node_group_default_labels = {}

# Default value for the max_pods_allowed field of

# managed_node_group_configurations. Any map entry that does not specify

# max_pods_allowed will use this value.

node_group_default_max_pods_allowed = null

# Default value for max_size field of managed_node_group_configurations.

node_group_default_max_size = 1

# Default value for min_size field of managed_node_group_configurations.

node_group_default_min_size = 1

# Default value for subnet_ids field of managed_node_group_configurations.

node_group_default_subnet_ids = null

# Default value for tags field of managed_node_group_configurations. Unlike

# common_tags which will always be merged in, these tags are only used if the

# tags field is omitted from the configuration.

node_group_default_tags = {}

# Default value for taint field of node_group_configurations. These taints are

# only used if the taint field is omitted from the configuration.

node_group_default_taints = []

# ARN of a permission boundary to apply on the IAM role created for the

# managed node groups.

node_group_iam_permissions_boundary = null

# The instance type to configure in the launch template. This value will be

# used when the instance_types field is set to null (NOT omitted, in which

# case var.node_group_default_instance_types will be used).

node_group_launch_template_instance_type = null

# Tags assigned to a node group are mirrored to the underlaying autoscaling

# group by default. If you want to disable this behaviour, set this flag to

# false. Note that this assumes that there is a one-to-one mappping between

# ASGs and Node Groups. If there is more than one ASG mapped to the Node

# Group, then this will only apply the tags on the first one. Due to a

# limitation in Terraform for_each where it can not depend on dynamic data, it

# is currently not possible in the module to map the tags to all ASGs. If you

# wish to apply the tags to all underlying ASGs, then it is recommended to

# call the aws_autoscaling_group_tag resource in a separate terraform state

# file outside of this module, or use a two-stage apply process.

node_group_mirror_tags_to_asg = true

# A map of tags to apply to the Security Group of the ASG for the managed node

# group pool. The key is the tag name and the value is the tag value.

node_group_security_group_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Role. The key is the tag

# name and the value is the tag value.

node_group_worker_iam_role_tags = {}

# Number of subnets provided in the var.control_plane_vpc_subnet_ids variable.

# When null (default), this is computed dynamically from the list. This is

# used to workaround terraform limitations where resource count and for_each

# can not depend on dynamic resources (e.g., if you are creating the subnets

# and the EKS cluster in the same module).

num_control_plane_vpc_subnet_ids = null

# Number of subnets provided in the var.worker_vpc_subnet_ids variable. When

# null (default), this is computed dynamically from the list. This is used to

# workaround terraform limitations where resource count and for_each can not

# depend on dynamic resources (e.g., if you are creating the subnets and the

# EKS cluster in the same module).

num_worker_vpc_subnet_ids = null

# When true, configures control plane services to run on Fargate so that the

# cluster can run without worker nodes. If true, requires kubergrunt to be

# available on the system, and create_default_fargate_iam_role be set to true.

schedule_control_plane_services_on_fargate = false

# ARN for KMS Key to use for envelope encryption of Kubernetes Secrets. By

# default Secrets in EKS are encrypted at rest at the EBS layer in the managed

# etcd cluster using shared AWS managed keys. Setting this variable will

# configure Kubernetes to use envelope encryption to encrypt Secrets using

# this KMS key on top of the EBS layer encryption.

secret_envelope_encryption_kms_key_arn = null

# When true, precreate the CloudWatch Log Group to use for EKS control plane

# logging. This is useful if you wish to customize the CloudWatch Log Group

# with various settings such as retention periods and KMS encryption. When

# false, EKS will automatically create a basic log group to use. Note that

# logs are only streamed to this group if var.enabled_cluster_log_types is

# true.

should_create_control_plane_cloudwatch_log_group = true

# If you are using ssh-grunt, this is the name of the IAM group from which

# users will be allowed to SSH to the EKS workers. To omit this variable, set

# it to an empty string (do NOT use null, or Terraform will complain).

ssh_grunt_iam_group = "ssh-grunt-users"

# If you are using ssh-grunt, this is the name of the IAM group from which

# users will be allowed to SSH to the EKS workers with sudo permissions. To

# omit this variable, set it to an empty string (do NOT use null, or Terraform

# will complain).

ssh_grunt_iam_group_sudo = "ssh-grunt-sudo-users"

# The tenancy of this server. Must be one of: default, dedicated, or host.

tenancy = "default"

# When set to true, the sync-core-components command will skip updating

# coredns. This variable is ignored if `use_kubergrunt_sync_components` is

# false.

upgrade_cluster_script_skip_coredns = false

# When set to true, the sync-core-components command will skip updating

# kube-proxy. This variable is ignored if `use_kubergrunt_sync_components` is

# false.

upgrade_cluster_script_skip_kube_proxy = false

# When set to true, the sync-core-components command will skip updating

# aws-vpc-cni. This variable is ignored if `use_kubergrunt_sync_components` is

# false.

upgrade_cluster_script_skip_vpc_cni = false

# When set to true, the sync-core-components command will wait until the new

# versions are rolled out in the cluster. This variable is ignored if

# `use_kubergrunt_sync_components` is false.

upgrade_cluster_script_wait_for_rollout = true

# If this variable is set to true, then use an exec-based plugin to

# authenticate and fetch tokens for EKS. This is useful because EKS clusters

# use short-lived authentication tokens that can expire in the middle of an

# 'apply' or 'destroy', and since the native Kubernetes provider in Terraform

# doesn't have a way to fetch up-to-date tokens, we recommend using an

# exec-based provider as a workaround. Use the use_kubergrunt_to_fetch_token

# input variable to control whether kubergrunt or aws is used to fetch tokens.

use_exec_plugin_for_auth = true

# Set this variable to true to enable the use of Instance Metadata Service

# Version 1 in this module's aws_launch_template. Note that while IMDsv2 is

# preferred due to its special security hardening, we allow this in order to

# support the use case of AMIs built outside of these modules that depend on

# IMDSv1.

use_imdsv1 = false

# When set to true, this will enable kubergrunt based component syncing. This

# step ensures that the core EKS components that are installed are upgraded to

# a matching version everytime the cluster's Kubernetes version is updated.

use_kubergrunt_sync_components = true

# EKS clusters use short-lived authentication tokens that can expire in the

# middle of an 'apply' or 'destroy'. To avoid this issue, we use an exec-based

# plugin to fetch an up-to-date token. If this variable is set to true, we'll

# use kubergrunt to fetch the token (in which case, kubergrunt must be

# installed and on PATH); if this variable is set to false, we'll use the aws

# CLI to fetch the token (in which case, aws must be installed and on PATH).

# Note this functionality is only enabled if use_exec_plugin_for_auth is set

# to true.

use_kubergrunt_to_fetch_token = true

# When set to true, this will enable kubergrunt verification to wait for the

# Kubernetes API server to come up before completing. If false, reverts to a

# 30 second timed wait instead.

use_kubergrunt_verification = true

# When true, all IAM policies will be managed as dedicated policies rather

# than inline policies attached to the IAM roles. Dedicated managed policies

# are friendlier to automated policy checkers, which may scan a single

# resource for findings. As such, it is important to avoid inline policies

# when targeting compliance with various security standards.

use_managed_iam_policies = true

# When set to true, this will enable management of the aws-vpc-cni

# configuration options using kubergrunt running as a local-exec provisioner.

# If you set this to false, the vpc_cni_* variables will be ignored.

use_vpc_cni_customize_script = true

# When true, enable prefix delegation mode for the AWS VPC CNI component of

# the EKS cluster. In prefix delegation mode, each ENI will be allocated 16 IP

# addresses (/28) instead of 1, allowing you to pack more Pods per node. Note

# that by default, AWS VPC CNI will always preallocate 1 full prefix - this

# means that you can potentially take up 32 IP addresses from the VPC network

# space even if you only have 1 Pod on the node. You can tweak this behavior

# by configuring the var.vpc_cni_warm_ip_target input variable.

vpc_cni_enable_prefix_delegation = true

# The minimum number of IP addresses (free and used) each node should start

# with. When null, defaults to the aws-vpc-cni application setting (currently

# 16 as of version 1.9.0). For example, if this is set to 25, every node will

# allocate 2 prefixes (32 IP addresses). On the other hand, if this was set to

# the default value, then each node will allocate only 1 prefix (16 IP

# addresses).

vpc_cni_minimum_ip_target = null

# The number of free IP addresses each node should maintain. When null,

# defaults to the aws-vpc-cni application setting (currently 16 as of version

# 1.9.0). In prefix delegation mode, determines whether the node will

# preallocate another full prefix. For example, if this is set to 5 and a node

# is currently has 9 Pods scheduled, then the node will NOT preallocate a new

# prefix block of 16 IP addresses. On the other hand, if this was set to the

# default value, then the node will allocate a new block when the first pod is

# scheduled.

vpc_cni_warm_ip_target = null

# The ID (ARN, alias ARN, AWS ID) of a customer managed KMS Key to use for

# encrypting worker system log data. Only used if

# var.enable_worker_cloudwatch_log_aggregation is true.

worker_cloudwatch_log_group_kms_key_id = null

# Name of the CloudWatch Log Group where worker system logs are reported to.

# Only used if var.enable_worker_cloudwatch_log_aggregation is true.

worker_cloudwatch_log_group_name = null

# The number of days to retain log events in the worker system logs log group.

# Refer to

# https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/cloudwatch_log_group#retention_in_days

# for all the valid values. When null, the log events are retained forever.

# Only used if var.enable_worker_cloudwatch_log_aggregation is true.

worker_cloudwatch_log_group_retention_in_days = null

# Tags to apply on the worker system logs CloudWatch Log Group, encoded as a

# map where the keys are tag keys and values are tag values. Only used if

# var.enable_worker_cloudwatch_log_aggregation is true.

worker_cloudwatch_log_group_tags = null

# List of ARNs of AWS IAM roles corresponding to EC2 instances that should be

# mapped as Kubernetes Nodes.

worker_iam_role_arns_for_k8s_role_mapping = []

# Prefix EKS worker resource names with this string. When you have multiple

# worker groups for the cluster, you can use this to namespace the resources.

# Defaults to empty string so that resource names are not excessively long by

# default.

worker_name_prefix = ""

# A list of the subnets into which the EKS Cluster's administrative pods will

# be launched. These should usually be all private subnets and include one in

# each AWS Availability Zone. Required when

# var.schedule_control_plane_services_on_fargate is true.

worker_vpc_subnet_ids = []

}

# ------------------------------------------------------------------------------------------------------

# DEPLOY GRUNTWORK'S EKS-CLUSTER MODULE

# ------------------------------------------------------------------------------------------------------

terraform {

source = "git::git@github.com:gruntwork-io/terraform-aws-service-catalog.git//modules/services/eks-cluster?ref=v0.127.2"

}

inputs = {

# ----------------------------------------------------------------------------------------------------

# REQUIRED VARIABLES

# ----------------------------------------------------------------------------------------------------

# The list of CIDR blocks to allow inbound access to the Kubernetes API.

allow_inbound_api_access_from_cidr_blocks = <list(string)>

# The name of the EKS cluster

cluster_name = <string>

# List of IDs of the subnets that can be used for the EKS Control Plane.

control_plane_vpc_subnet_ids = <list(string)>

# ID of the VPC where the EKS resources will be deployed.

vpc_id = <string>

# ----------------------------------------------------------------------------------------------------

# OPTIONAL VARIABLES

# ----------------------------------------------------------------------------------------------------

# The authentication mode for the cluster. Valid values are CONFIG_MAP, API or

# API_AND_CONFIG_MAP.

access_config_authentication_mode = "CONFIG_MAP"

# Map of EKS Access Entries to be created for the cluster.

access_entries = {}

# Map of EKS Access Entry Policy Associations to be created for the cluster.

access_entry_policy_associations = {}

# A list of additional security group IDs to attach to the control plane.

additional_security_groups_for_control_plane = []

# A list of additional security group IDs to attach to the worker nodes.

additional_security_groups_for_workers = []

# The ARNs of SNS topics where CloudWatch alarms (e.g., for CPU, memory, and

# disk space usage) should send notifications.

alarms_sns_topic_arn = []

# The list of CIDR blocks to allow inbound SSH access to the worker groups.

allow_inbound_ssh_from_cidr_blocks = []

# The list of security group IDs to allow inbound SSH access to the worker

# groups.

allow_inbound_ssh_from_security_groups = []

# The list of CIDR blocks to allow inbound access to the private Kubernetes

# API endpoint (e.g. the endpoint within the VPC, not the public endpoint).

allow_private_api_access_from_cidr_blocks = []

# The list of security groups to allow inbound access to the private

# Kubernetes API endpoint (e.g. the endpoint within the VPC, not the public

# endpoint).

allow_private_api_access_from_security_groups = []

# Default value for enable_detailed_monitoring field of

# autoscaling_group_configurations.

asg_default_enable_detailed_monitoring = true

# Default value for the http_put_response_hop_limit field of

# autoscaling_group_configurations.

asg_default_http_put_response_hop_limit = null

# Default value for the asg_instance_root_volume_encryption field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_encryption will use this value.

asg_default_instance_root_volume_encryption = true

# Default value for the asg_instance_root_volume_iops field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_iops will use this value.

asg_default_instance_root_volume_iops = null

# Default value for the asg_instance_root_volume_size field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_size will use this value.

asg_default_instance_root_volume_size = 40

# Default value for the asg_instance_root_volume_throughput field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_throughput will use this value.

asg_default_instance_root_volume_throughput = null

# Default value for the asg_instance_root_volume_type field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_root_volume_type will use this value.

asg_default_instance_root_volume_type = "standard"

# Default value for the asg_instance_type field of

# autoscaling_group_configurations. Any map entry that does not specify

# asg_instance_type will use this value.

asg_default_instance_type = "t3.medium"

# Default value for the max_pods_allowed field of

# autoscaling_group_configurations. Any map entry that does not specify

# max_pods_allowed will use this value.

asg_default_max_pods_allowed = null

# Default value for the max_size field of autoscaling_group_configurations.

# Any map entry that does not specify max_size will use this value.

asg_default_max_size = 2

# Default value for the min_size field of autoscaling_group_configurations.

# Any map entry that does not specify min_size will use this value.

asg_default_min_size = 1

# Default value for the multi_instance_overrides field of

# autoscaling_group_configurations. Any map entry that does not specify

# multi_instance_overrides will use this value.

asg_default_multi_instance_overrides = []

# Default value for the on_demand_allocation_strategy field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_allocation_strategy will use this value.

asg_default_on_demand_allocation_strategy = null

# Default value for the on_demand_base_capacity field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_base_capacity will use this value.

asg_default_on_demand_base_capacity = null

# Default value for the on_demand_percentage_above_base_capacity field of

# autoscaling_group_configurations. Any map entry that does not specify

# on_demand_percentage_above_base_capacity will use this value.

asg_default_on_demand_percentage_above_base_capacity = null

# Default value for the spot_allocation_strategy field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_allocation_strategy will use this value.

asg_default_spot_allocation_strategy = null

# Default value for the spot_instance_pools field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_instance_pools will use this value.

asg_default_spot_instance_pools = null

# Default value for the spot_max_price field of

# autoscaling_group_configurations. Any map entry that does not specify

# spot_max_price will use this value. Set to empty string (default) to mean

# on-demand price.

asg_default_spot_max_price = null

# Default value for the tags field of autoscaling_group_configurations. Any

# map entry that does not specify tags will use this value.

asg_default_tags = []

# Default value for the use_multi_instances_policy field of

# autoscaling_group_configurations. Any map entry that does not specify

# use_multi_instances_policy will use this value.

asg_default_use_multi_instances_policy = false

# Custom name for the IAM instance profile for the Self-managed workers. When

# null, the IAM role name will be used. If var.asg_use_resource_name_prefix is

# true, this will be used as a name prefix.

asg_iam_instance_profile_name = null

# ARN of a permission boundary to apply on the IAM role created for the self

# managed workers.

asg_iam_permissions_boundary = null

# A map of tags to apply to the Security Group of the ASG for the self managed

# worker pool. The key is the tag name and the value is the tag value.

asg_security_group_tags = {}

# When true, all the relevant resources for self managed workers will be set

# to use the name_prefix attribute so that unique names are generated for

# them. This allows those resources to support recreation through

# create_before_destroy lifecycle rules. Set to false if you were using any

# version before 0.65.0 and wish to avoid recreating the entire worker pool on

# your cluster.

asg_use_resource_name_prefix = true

# A map of custom tags to apply to the EKS Worker IAM Policies. The key is the

# tag name and the value is the tag value.

asg_worker_iam_policy_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Role. The key is the tag

# name and the value is the tag value.

asg_worker_iam_role_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Instance Profile. The

# key is the tag name and the value is the tag value.

asg_worker_instance_profile_tags = {}

# A map of custom tags to apply to the Fargate Profile if enabled. The key is

# the tag name and the value is the tag value.

auth_merger_eks_fargate_profile_tags = {}

# Configure one or more Auto Scaling Groups (ASGs) to manage the EC2 instances

# in this cluster. If any of the values are not provided, the specified

# default variable will be used to lookup a default value.

autoscaling_group_configurations = {}

# Adds additional tags to each ASG that allow a cluster autoscaler to

# auto-discover them.

autoscaling_group_include_autoscaler_discovery_tags = true

# Name of the default aws-auth ConfigMap to use. This will be the name of the

# ConfigMap that gets created by this module in the aws-auth-merger namespace

# to seed the initial aws-auth ConfigMap.

aws_auth_merger_default_configmap_name = "main-aws-auth"

# Location of the container image to use for the aws-auth-merger app. You can

# use the Dockerfile provided in terraform-aws-eks to construct an image. See

# https://github.com/gruntwork-io/terraform-aws-eks/blob/master/modules/eks-aws-auth-merger/core-concepts.md#how-do-i-use-the-aws-auth-merger

# for more info.

aws_auth_merger_image = null

# Namespace to deploy the aws-auth-merger into. The app will watch for

# ConfigMaps in this Namespace to merge into the aws-auth ConfigMap.

aws_auth_merger_namespace = "aws-auth-merger"

# Whether or not to bootstrap an access entry with cluster admin permissions

# for the cluster creator.

bootstrap_cluster_creator_admin_permissions = true

# Cloud init scripts to run on the EKS worker nodes when it is booting. See

# the part blocks in

# https://www.terraform.io/docs/providers/template/d/cloudinit_config.html for

# syntax. To override the default boot script installed as part of the module,

# use the key `default`.

cloud_init_parts = {}

# ARN of permissions boundary to apply to the cluster IAM role - the IAM role

# created for the EKS cluster.

cluster_iam_role_permissions_boundary = null

# The AMI to run on each instance in the EKS cluster. You can build the AMI

# using the Packer template eks-node-al2.json. One of var.cluster_instance_ami

# or var.cluster_instance_ami_filters is required. Only used if

# var.cluster_instance_ami_filters is null. Set to null if

# cluster_instance_ami_filters is set.

cluster_instance_ami = null

# Properties on the AMI that can be used to lookup a prebuilt AMI for use with

# self managed workers. You can build the AMI using the Packer template

# eks-node-al2.json. One of var.cluster_instance_ami or

# var.cluster_instance_ami_filters is required. If both are defined,

# var.cluster_instance_ami_filters will be used. Set to null if

# cluster_instance_ami is set.

cluster_instance_ami_filters = null

# Whether or not to associate a public IP address to the instances of the self

# managed ASGs. Will only work if the instances are launched in a public

# subnet.

cluster_instance_associate_public_ip_address = false

# The name of the Key Pair that can be used to SSH to each instance in the EKS

# cluster

cluster_instance_keypair_name = null

# The IP family used to assign Kubernetes pod and service addresses. Valid

# values are ipv4 (default) and ipv6. You can only specify an IP family when

# you create a cluster, changing this value will force a new cluster to be

# created.

cluster_network_config_ip_family = "ipv4"

# The CIDR block to assign Kubernetes pod and service IP addresses from. If

# you don't specify a block, Kubernetes assigns addresses from either the

# 10.100.0.0/16 or 172.20.0.0/16 CIDR blocks. You can only specify a custom

# CIDR block when you create a cluster, changing this value will force a new

# cluster to be created.

cluster_network_config_service_ipv4_cidr = null

# The ID (ARN, alias ARN, AWS ID) of a customer managed KMS Key to use for

# encrypting log data in the CloudWatch log group for EKS control plane logs.

control_plane_cloudwatch_log_group_kms_key_id = null

# The number of days to retain log events in the CloudWatch log group for EKS

# control plane logs. Refer to

# https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/cloudwatch_log_group#retention_in_days

# for all the valid values. When null, the log events are retained forever.

control_plane_cloudwatch_log_group_retention_in_days = null

# Tags to apply on the CloudWatch Log Group for EKS control plane logs,

# encoded as a map where the keys are tag keys and values are tag values.

control_plane_cloudwatch_log_group_tags = null

# A list of availability zones in the region that we CANNOT use to deploy the

# EKS control plane. You can use this to avoid availability zones that may not

# be able to provision the resources (e.g ran out of capacity). If empty, will

# allow all availability zones.

control_plane_disallowed_availability_zones = ["us-east-1e"]

# When true, IAM role will be created and attached to Fargate control plane

# services.

create_default_fargate_iam_role = true

# The name to use for the default Fargate execution IAM role that is created

# when create_default_fargate_iam_role is true. When null, defaults to

# CLUSTER_NAME-fargate-role.

custom_default_fargate_iam_role_name = null

# A map of custom tags to apply to the EKS add-ons. The key is the tag name

# and the value is the tag value.

custom_tags_eks_addons = {}

# A map of unique identifiers to egress security group rules to attach to the

# worker groups.

custom_worker_egress_security_group_rules = {}

# A map of unique identifiers to ingress security group rules to attach to the

# worker groups.

custom_worker_ingress_security_group_rules = {}

# Parameters for the worker cpu usage widget to output for use in a CloudWatch

# dashboard.

dashboard_cpu_usage_widget_parameters = {"height":6,"period":60,"width":8}

# Parameters for the worker disk usage widget to output for use in a

# CloudWatch dashboard.

dashboard_disk_usage_widget_parameters = {"height":6,"period":60,"width":8}

# Parameters for the worker memory usage widget to output for use in a

# CloudWatch dashboard.

dashboard_memory_usage_widget_parameters = {"height":6,"period":60,"width":8}

# A map of default tags to apply to all supported resources in this module.

# These tags will be merged with any other resource specific tags. The key is

# the tag name and the value is the tag value.

default_tags = {}

# Configuraiton object for the EBS CSI Driver EKS AddOn

ebs_csi_driver_addon_config = {}

# A map of custom tags to apply to the EBS CSI Driver AddOn. The key is the

# tag name and the value is the tag value.

ebs_csi_driver_addon_tags = {}

# A map of custom tags to apply to the IAM Policies created for the EBS CSI

# Driver IAM Role if enabled. The key is the tag name and the value is the tag

# value.

ebs_csi_driver_iam_policy_tags = {}

# A map of custom tags to apply to the EBS CSI Driver IAM Role if enabled. The

# key is the tag name and the value is the tag value.

ebs_csi_driver_iam_role_tags = {}

# If using KMS encryption of EBS volumes, provide the KMS Key ARN to be used

# for a policy attachment.

ebs_csi_driver_kms_key_arn = null

# The namespace for the EBS CSI Driver. This will almost always be the

# kube-system namespace.

ebs_csi_driver_namespace = "kube-system"

# The Service Account name to be used with the EBS CSI Driver

ebs_csi_driver_sa_name = "ebs-csi-controller-sa"

# Map of EKS add-ons, where key is name of the add-on and value is a map of

# add-on properties.

eks_addons = {}

# Configuration block with compute configuration for EKS Auto Mode.

eks_auto_mode_compute_config = {"enabled":true,"node_pools":["general-purpose","system"]}

# Whether or not to create an IAM Role for the EKS Worker Nodes when using EKS

# Auto Mode. If using the built-in NodePools for EKS Auto Mode you must either

# provide an IAM Role ARN for `eks_auto_mode_compute_config.node_role_arn` or

# set this to true to automatically create one.

eks_auto_mode_create_node_iam_role = true

# Configuration block with elastic load balancing configuration for the

# cluster.

eks_auto_mode_elastic_load_balancing_config = {}

# Whether or not to enable EKS Auto Mode.

eks_auto_mode_enabled = false

# Description of the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_description = null

# IAM Role Name to for the EKS Auto Mode Node IAM Role. If this is not set a

# default name will be provided in the form of

# `<var.cluster_name-eks-auto-mode-role>`

eks_auto_mode_iam_role_name = null

# The IAM Role Path for the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_path = null

# Permissions Boundary ARN to be used with the EKS Auto Mode Node IAM Role.

eks_auto_mode_iam_role_permissions_boundary = null

# Whether or not to use `eks_auto_mode_iam_role_name` as a prefix for the EKS

# Auto Mode Node IAM Role Name.

eks_auto_mode_iam_role_use_name_prefix = true

# Configuration block with storage configuration for EKS Auto Mode.

eks_auto_mode_storage_config = {}

# A map of custom tags to apply to the EKS Cluster Cluster Creator Access

# Entry. The key is the tag name and the value is the tag value.

eks_cluster_creator_access_entry_tags = {}

# A map of custom tags to apply to the EKS Cluster IAM Role. The key is the

# tag name and the value is the tag value.

eks_cluster_iam_role_tags = {}

# A map of custom tags to apply to the EKS Cluster OIDC Provider. The key is

# the tag name and the value is the tag value.

eks_cluster_oidc_tags = {}

# A map of custom tags to apply to the Security Group for the EKS Cluster

# Control Plane. The key is the tag name and the value is the tag value.

eks_cluster_security_group_tags = {}

# A map of custom tags to apply to the EKS Cluster Control Plane. The key is

# the tag name and the value is the tag value.

eks_cluster_tags = {}

# A map of custom tags to apply to the Control Plane Services Fargate Profile

# IAM Role for this EKS Cluster if enabled. The key is the tag name and the

# value is the tag value.

eks_fargate_profile_iam_role_tags = {}

# A map of custom tags to apply to the Control Plane Services Fargate Profile

# for this EKS Cluster if enabled. The key is the tag name and the value is

# the tag value.

eks_fargate_profile_tags = {}

# If set to true, installs the aws-auth-merger to manage the aws-auth

# configuration. When true, requires setting the var.aws_auth_merger_image

# variable.

enable_aws_auth_merger = false

# When true, deploy the aws-auth-merger into Fargate. It is recommended to run

# the aws-auth-merger on Fargate to avoid chicken and egg issues between the

# aws-auth-merger and having an authenticated worker pool.

enable_aws_auth_merger_fargate = true

# Set to true to enable several basic CloudWatch alarms around CPU usage,

# memory usage, and disk space usage. If set to true, make sure to specify SNS

# topics to send notifications to using var.alarms_sns_topic_arn.

enable_cloudwatch_alarms = true

# Set to true to add IAM permissions to send custom metrics to CloudWatch.

# This is useful in combination with

# https://github.com/gruntwork-io/terraform-aws-monitoring/tree/master/modules/agents/cloudwatch-agent

# to get memory and disk metrics in CloudWatch for your Bastion host.

enable_cloudwatch_metrics = true

# When set to true, the module configures and install the EBS CSI Driver as an

# EKS managed AddOn

# (https://docs.aws.amazon.com/eks/latest/userguide/managing-ebs-csi.html).

enable_ebs_csi_driver = false

# When set to true, the module configures EKS add-ons

# (https://docs.aws.amazon.com/eks/latest/userguide/eks-add-ons.html)

# specified with `eks_addons`. VPC CNI configurations with

# `use_vpc_cni_customize_script` isn't fully supported with addons, as the

# automated add-on lifecycles could potentially undo the configuration

# changes.

enable_eks_addons = false

# Enable fail2ban to block brute force log in attempts. Defaults to true.

enable_fail2ban = true

# Set to true to send worker system logs to CloudWatch. This is useful in

# combination with

# https://github.com/gruntwork-io/terraform-aws-monitoring/tree/master/modules/logs/cloudwatch-log-aggregation-scripts

# to do log aggregation in CloudWatch. Note that this is only recommended for

# aggregating system level logs from the server instances. Container logs

# should be managed through fluent-bit deployed with eks-core-services.

enable_worker_cloudwatch_log_aggregation = false

# A list of the desired control plane logging to enable. See

# https://docs.aws.amazon.com/eks/latest/userguide/control-plane-logs.html for

# the list of available logs.

enabled_control_plane_log_types = ["api","audit","authenticator"]

# Whether or not to enable public API endpoints which allow access to the

# Kubernetes API from outside of the VPC. Note that private access within the

# VPC is always enabled.

endpoint_public_access = true

# If you are using ssh-grunt and your IAM users / groups are defined in a

# separate AWS account, you can use this variable to specify the ARN of an IAM

# role that ssh-grunt can assume to retrieve IAM group and public SSH key info

# from that account. To omit this variable, set it to an empty string (do NOT

# use null, or Terraform will complain).

external_account_ssh_grunt_role_arn = ""

# List of ARNs of AWS IAM roles corresponding to Fargate Profiles that should

# be mapped as Kubernetes Nodes.

fargate_profile_executor_iam_role_arns_for_k8s_role_mapping = []

# A list of availability zones in the region that we CANNOT use to deploy the

# EKS Fargate workers. You can use this to avoid availability zones that may

# not be able to provision the resources (e.g ran out of capacity). If empty,

# will allow all availability zones.

fargate_worker_disallowed_availability_zones = ["us-east-1d","us-east-1e","ca-central-1d"]

# The period, in seconds, over which to measure the CPU utilization percentage

# for the ASG.

high_worker_cpu_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster CPU utilization

# percentage above this threshold.

high_worker_cpu_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_cpu_utilization_treat_missing_data = "missing"

# The period, in seconds, over which to measure the root disk utilization

# percentage for the ASG.

high_worker_disk_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster root disk utilization

# percentage above this threshold.

high_worker_disk_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_disk_utilization_treat_missing_data = "missing"

# The period, in seconds, over which to measure the Memory utilization

# percentage for the ASG.

high_worker_memory_utilization_period = 60

# Trigger an alarm if the ASG has an average cluster Memory utilization

# percentage above this threshold.

high_worker_memory_utilization_threshold = 90

# Sets how this alarm should handle entering the INSUFFICIENT_DATA state.

# Based on

# https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html#alarms-and-missing-data.

# Must be one of: 'missing', 'ignore', 'breaching' or 'notBreaching'.

high_worker_memory_utilization_treat_missing_data = "missing"

# Mapping of IAM role ARNs to Kubernetes RBAC groups that grant permissions to

# the user.

iam_role_to_rbac_group_mapping = {}

# Mapping of IAM user ARNs to Kubernetes RBAC groups that grant permissions to

# the user.

iam_user_to_rbac_group_mapping = {}

# The URL from which to download Kubergrunt if it's not installed already. Use

# to specify a version of kubergrunt that is compatible with your specified

# kubernetes version. Ex.

# 'https://github.com/gruntwork-io/kubergrunt/releases/download/v0.17.3/kubergrunt_<platform>'

kubergrunt_download_url = "https://github.com/gruntwork-io/kubergrunt/releases/download/v0.17.3/kubergrunt_<platform>"

# Version of Kubernetes to use. Refer to EKS docs for list of available

# versions

# (https://docs.aws.amazon.com/eks/latest/userguide/platform-versions.html).

kubernetes_version = "1.32"

# Configure one or more Node Groups to manage the EC2 instances in this

# cluster. Set to empty object ({}) if you do not wish to configure managed

# node groups.

managed_node_group_configurations = {}

# Default value for capacity_type field of managed_node_group_configurations.

node_group_default_capacity_type = "ON_DEMAND"

# Default value for desired_size field of managed_node_group_configurations.

node_group_default_desired_size = 1

# Default value for enable_detailed_monitoring field of

# managed_node_group_configurations.

node_group_default_enable_detailed_monitoring = true

# Default value for http_put_response_hop_limit field of

# managed_node_group_configurations. Any map entry that does not specify

# http_put_response_hop_limit will use this value.

node_group_default_http_put_response_hop_limit = null

# Default value for the instance_root_volume_encryption field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_encryption = true

# Default value for the instance_root_volume_size field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_size = 40

# Default value for the instance_root_volume_type field of

# managed_node_group_configurations.

node_group_default_instance_root_volume_type = "gp3"

# Default value for instance_types field of managed_node_group_configurations.

node_group_default_instance_types = null

# Default value for labels field of managed_node_group_configurations. Unlike

# common_labels which will always be merged in, these labels are only used if

# the labels field is omitted from the configuration.

node_group_default_labels = {}

# Default value for the max_pods_allowed field of

# managed_node_group_configurations. Any map entry that does not specify

# max_pods_allowed will use this value.

node_group_default_max_pods_allowed = null

# Default value for max_size field of managed_node_group_configurations.

node_group_default_max_size = 1

# Default value for min_size field of managed_node_group_configurations.

node_group_default_min_size = 1

# Default value for subnet_ids field of managed_node_group_configurations.

node_group_default_subnet_ids = null

# Default value for tags field of managed_node_group_configurations. Unlike

# common_tags which will always be merged in, these tags are only used if the

# tags field is omitted from the configuration.

node_group_default_tags = {}

# Default value for taint field of node_group_configurations. These taints are

# only used if the taint field is omitted from the configuration.

node_group_default_taints = []

# ARN of a permission boundary to apply on the IAM role created for the

# managed node groups.

node_group_iam_permissions_boundary = null

# The instance type to configure in the launch template. This value will be

# used when the instance_types field is set to null (NOT omitted, in which

# case var.node_group_default_instance_types will be used).

node_group_launch_template_instance_type = null

# Tags assigned to a node group are mirrored to the underlaying autoscaling

# group by default. If you want to disable this behaviour, set this flag to

# false. Note that this assumes that there is a one-to-one mappping between

# ASGs and Node Groups. If there is more than one ASG mapped to the Node

# Group, then this will only apply the tags on the first one. Due to a

# limitation in Terraform for_each where it can not depend on dynamic data, it

# is currently not possible in the module to map the tags to all ASGs. If you

# wish to apply the tags to all underlying ASGs, then it is recommended to

# call the aws_autoscaling_group_tag resource in a separate terraform state

# file outside of this module, or use a two-stage apply process.

node_group_mirror_tags_to_asg = true

# A map of tags to apply to the Security Group of the ASG for the managed node

# group pool. The key is the tag name and the value is the tag value.

node_group_security_group_tags = {}

# A map of custom tags to apply to the EKS Worker IAM Role. The key is the tag

# name and the value is the tag value.

node_group_worker_iam_role_tags = {}

# Number of subnets provided in the var.control_plane_vpc_subnet_ids variable.

# When null (default), this is computed dynamically from the list. This is

# used to workaround terraform limitations where resource count and for_each

# can not depend on dynamic resources (e.g., if you are creating the subnets

# and the EKS cluster in the same module).

num_control_plane_vpc_subnet_ids = null

# Number of subnets provided in the var.worker_vpc_subnet_ids variable. When

# null (default), this is computed dynamically from the list. This is used to

# workaround terraform limitations where resource count and for_each can not

# depend on dynamic resources (e.g., if you are creating the subnets and the

# EKS cluster in the same module).

num_worker_vpc_subnet_ids = null

# When true, configures control plane services to run on Fargate so that the

# cluster can run without worker nodes. If true, requires kubergrunt to be

# available on the system, and create_default_fargate_iam_role be set to true.

schedule_control_plane_services_on_fargate = false

# ARN for KMS Key to use for envelope encryption of Kubernetes Secrets. By

# default Secrets in EKS are encrypted at rest at the EBS layer in the managed

# etcd cluster using shared AWS managed keys. Setting this variable will

# configure Kubernetes to use envelope encryption to encrypt Secrets using

# this KMS key on top of the EBS layer encryption.

secret_envelope_encryption_kms_key_arn = null

# When true, precreate the CloudWatch Log Group to use for EKS control plane

# logging. This is useful if you wish to customize the CloudWatch Log Group

# with various settings such as retention periods and KMS encryption. When

# false, EKS will automatically create a basic log group to use. Note that

# logs are only streamed to this group if var.enabled_cluster_log_types is

# true.

should_create_control_plane_cloudwatch_log_group = true

# If you are using ssh-grunt, this is the name of the IAM group from which

# users will be allowed to SSH to the EKS workers. To omit this variable, set

# it to an empty string (do NOT use null, or Terraform will complain).

ssh_grunt_iam_group = "ssh-grunt-users"

# If you are using ssh-grunt, this is the name of the IAM group from which

# users will be allowed to SSH to the EKS workers with sudo permissions. To

# omit this variable, set it to an empty string (do NOT use null, or Terraform

# will complain).

ssh_grunt_iam_group_sudo = "ssh-grunt-sudo-users"

# The tenancy of this server. Must be one of: default, dedicated, or host.

tenancy = "default"

# When set to true, the sync-core-components command will skip updating